Chapter 2 – Hypermedia

J’irai où ton souffle nous mène

Dans les pays d’ivoire et d’ébène Khaled, Aïcha (1996)

Hypermedia plays a fundamental role on the Web. Unfortunately, in order to turn the Web into the global hypertext system it is today, several compromises had to be made. This chapter starts with a short history of hypermedia, moving on to its implementation on the Web and its subsequent conceptualization through REST. If we want machines to automatically perform tasks on the Web, enhancements are necessary. This observation will lead to the research questions guiding this doctoral dissertation.

In colloquial language, “the Internet” and “the Web” are treated synonymously. In reality, the Internet [9] refers to the international computer network, whereas the World Wide Web [5] is an information system, running on top of the Internet, that provides interconnected documents and services. While the information system is actually the Web, in practice, people usually refer to any action they perform online simply as “using the Internet”.

As many people have been introduced to both at the same time, it might indeed be unintuitive to distinguish between the two. An interesting way to understand the immense revolution the Web has brought upon the Internet is to look at the small time window in the early 1990s when the Internet had started spreading but the Web hadn’t yet.

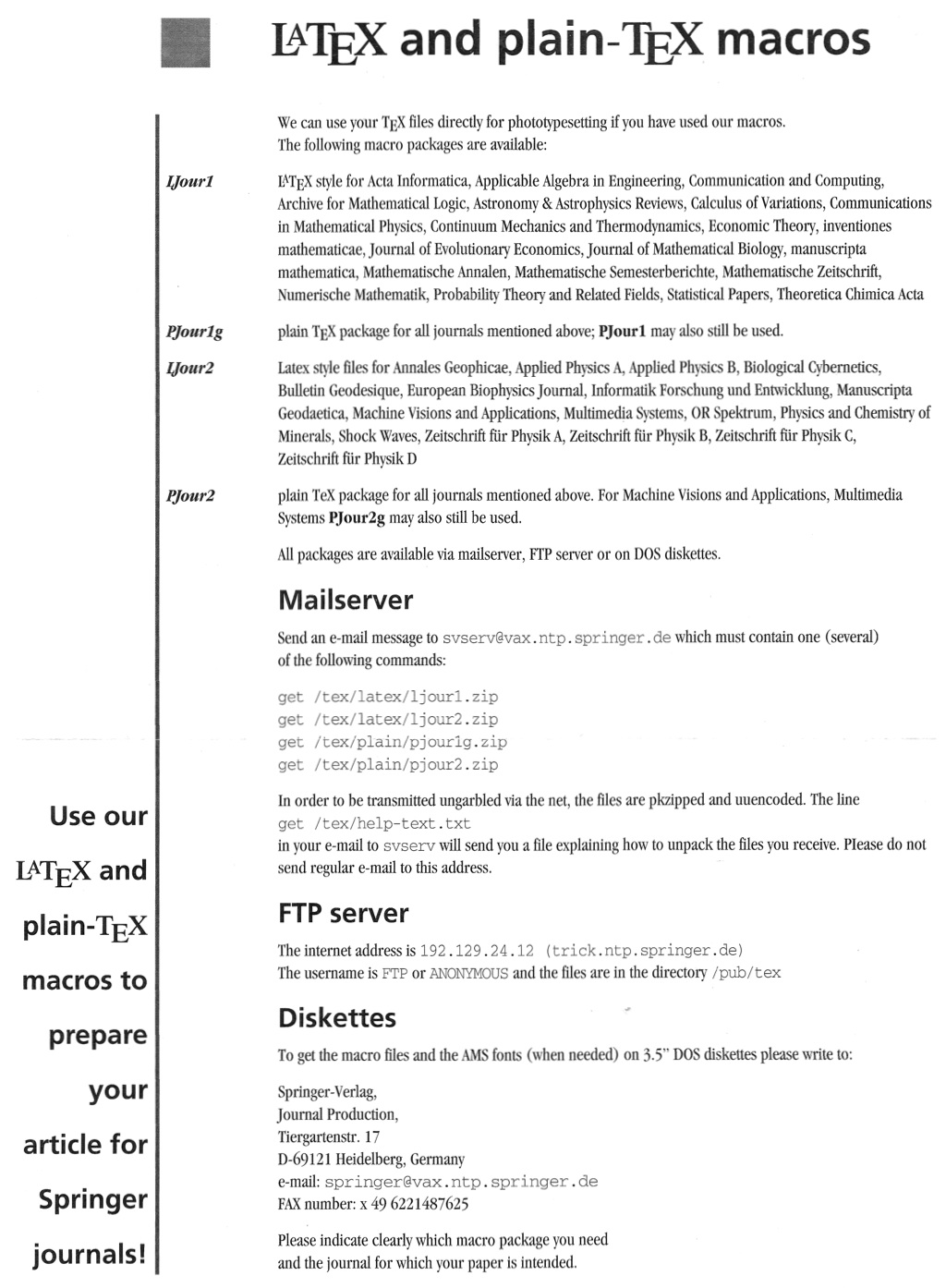

The flyer in Figure 1 dates from this period, and was targeted at people with a technical background who likely had Internet access. It instructs them to either send an e-mail with commands, or connect to a server and manually traverse a remote file system in order to obtain a set of documents. In contrast, the Web allows publishers to simply print an address that can be typed into a Web browser. This address could point to an information page that contains links to the needed documents. Nowadays, it is even common to have a machine-readable QR code on such a flyer, so devices like smartphones can follow the “paper link” autonomously.

Clearly, we’ve come a long way. This chapter will tell the history of hypermedia and the Web through today’s eyes, with a focus on what is still missing for current and future applications. These missing pieces form the basis for this dissertation’s research questions, which are formulated at the end of the chapter. The next section takes us back in time for a journey that surprisingly already starts in 1965—

A history of hypermedia

Theodor Holm Nelson (*1937) is a technology pioneer most known for Project Xanadu [23]. Even though a fully functional version has not been released to date, it inspired generations of hypertext research. When coining the term, he wrote “we’ve been speaking hypertext all our lives and never known it.” [16]

The first written mention of the word hypertext was by Ted Nelson in a 1965 article [15], where he had introduced it as “a body of written or pictorial material interconnected in such a complex way that it could not conveniently be presented or represented on paper.” In that same article, he mentioned hypermedia as a generalization of the concept to other media such as movies (consequently called hyperfilm). While this initial article was not very elaborate on the precise meaning of these terms, his infamous cult double-book “Computer Lib / Dream Machines” [16] to make them more clear. Later, in “Literary Machines” [17], he defined hypertext as “non-sequential writing—

It is important to realize that Nelson’s vision differs from the Web’s implementation of hypertext in various ways. He envisioned chunk-style hypertext with footnotes or labels offering choices that came to the screen as you clicked them, collateral hypertext to provide annotations to a text, stretchtext, where a continuously updating document could contain parts of other documents with a selectable level of detail, and grand hypertext, which would consist of everything written about a subject [16]. In particular, Nelson thought of much more flexible ways of interlinking documents, where links could be multi-directional and created by any party, as opposed to the uni-directional, publisher-driven links of the current Web. Information could also be intertwined with other pieces of content, which Nelson called transclusion.

The idea of interlinking documents even predates Nelson. Vannevar Bush wrote his famous article “As We May Think” in 1945,detailing a hypothetical device the memex [8], that enabled researchers to follow a complex trail of documents… on microfilm. That idea can in turn be traced back to Paul Otlet, who imagined a mesh of many electric telescopes already in 1934 [18]. While unquestionably brilliant, both works now read like anachronisms. They were onto something crucial, but the missing piece would only be invented a few decades later: the personal computer.

Although Nelson’s own hypertext platform Xanadu was never realized [23], other computer pioneers such as Doug Engelbart started to implement various kinds of hypertext software. By 1987, the field had sufficiently matured for an extensive survey, summarizing the then-existing hypertext systems [10]. Almost none of the discussed systems are still around today, but the concepts presented in the article sound familiar. The main difference with the Web is that all these early hypermedia systems were closed. They implemented hypermedia in the sense that they presented information on a screen that offered the user choices of where to go next. These choices, however, were limited to a local set of documents. In the article, Conklin defines the concept of hypertext as “windows on the screen associated with objects in a database” [10], indicating his presumption that there is indeed a single database containing all objects. Those systems are thus closed in the sense that they cannot cross the border of a single environment, and, as a consequence, also in the sense that they cannot access information from systems running different software.

As a result, hypertext systems were rather small: documentation, manuals, books, topical encyclopedias, personal knowledge bases, … In contrast, Nelson’s vision hinted at a global system, even though he did not have a working implementation by the end of the 1980s, when more than a dozen other hypertext systems were already in use. The focus of hypertext research at the time was on adding new features to existing systems. In hindsight, it seems ironic that researchers back then literally didn’t succeed in thinking “outside the box”.

The invention of the Web

His World Wide Web was only accepted as a demo in 1991 [4]. Yet at the 1993 Hypertext conference, all projects were somehow connected to the Web, as Tim Berners-Lee recalls.

Looking through the eyes of that time, it comes as no surprise that Tim Berners-Lee’s invention was not overly enthusiastically received by the 1991 Hypertext conference organizers [4]. The World Wide Web [5] looked very basic on screen (only text with links), whereas other systems showed interactive images and maps. But in the end, the global scalability of the Web turned out to be more important than the bells and whistles of its competitors. It quickly turned the Web into the most popular application of the Internet. Nearly all other hypertext research was halted, with several researchers switching to Web-related topics such as Web engineering or Semantic Web (Chapter 3). Remaining core hypermedia research is now almost exclusively carried out within the field of adaptive hypermedia (Chapter 6).

The Web’s components

The Web is not a single monolithic block, but rather a combination of three core components, each of which is discussed below.

- Uniform Resource Locator (URL)

-

A URL [7] has double functionality. URLs start with a protocol (

httporhttps), followed by a domain name to identify the server and a path to identify the resource on the server. On the one hand, it uniquely identifies a resource, just like a national identification number identifies a person. On the other hand, it also locates a resource, like a street address allows to locate a person. However, note that both functions are clearly distinct: a national identification number doesn’t tell you where a person lives, and a street address doesn’t always uniquely point to a single person. URLs provide both identification and location at the same time, because they are structured in a special way. The domain name part of a URL allows the browser to locate the server on the Internet, and the path part gives the server-specific name of the resource. Together, these parts uniquely identify—and locate— each resource on the Internet. - Hypertext Transfer Protocol (HTTP)

-

All HTTP requests and responses contain metadata in standardized headers. For instance, a client can indicate its version, and a server can specify the resource’s creation date. Web clients and servers communicate through the standardized protocol HTTP [13]. This protocol has a simple request/

response message paradigm. Each HTTP request consists of the method name of the requested action and the URL to the resource that is the subject of this action. The set of possible methods is limited, and each method has highly specific semantics. A client asks for a representation of a resource (or a manipulation thereof), and the server sends an HTTP response back in which the representation is enclosed. - Hypertext Markup Language (HTML)

-

Finally, the Web also needs a language to mark up hypertext, which is HTML [3]. Although HTML is only one possible representation format—

as any document type can be transported by HTTP— its native support for several hypermedia controls [1] makes it an excellent choice for Web documents. HTML documents can contain links to other resources, which are identified by their URL. Upon activation of the link, the browser dereferences the URL by locating, downloading, and displaying the document.

A URL identifies a resource in an HTTP request, which returns an HTML representation that links to other resources through URLs.

These three components are strongly interconnected. URLs are the identification and location mechanism used by HTTP to manipulate resources and to retrieve their representations. Many resources have an HTML representation (or another format with hypermedia support) that in turn contains references to other resources through their URL.

The Web’s architectural principles

Like many good inventions, the Web somehow happened by accident. That’s not to say that Berners-Lee did not deliberately design URLs, HTTP, and HTML as they are—

HTTP, the protocol of the Web, is not the only implementation of REST. And unfortunately, not every HTTP application necessarily conforms to all REST constraints. Yet, full adherence to these constraints is necessary in order to inherit all desirable properties of the REST architectural style.

Fielding devises REST by starting from a system without defined boundaries, iteratively adding constraints to induce desired properties. In particular, there’s a focus on the properties scalability, allowing the Web to grow without negative impact on any of the involved actors, and independent evolution of client and server, ensuring interactions between components continue to work even when changes occur on either side. Some constraints implement widely understood concepts, such as the client–server constraints and the cache constraints, which won’t be discussed further here. Two constraints are especially unique to REST (and thus the Web), and will play an important role in the remainder of this thesis: the statelessness constraint and the uniform interface constraints.

The statelessness constraint

When a client is sending “give me the next page”, the interaction is stateful, because the server needs the previous message to understand what page it should serve. In contrast, “give me the third page of search results for ‘apple’” is stateless because it is fully self-explanatory—

REST adds the constraint that the client–server interaction must be stateless, thereby inducing the properties of visibility, reliability, and scalability [11]. This means that every request to the server must contain all necessary information to process it, so its understanding does not depend on previously sent messages. This constraint is often loosely paraphrased as “the server doesn’t keep state,” seemingly implying that the client can only perform read-only operations. Yet, we all know that the Web does supports many different kinds of write operations: servers do remember our username and profile, and let us add content such as text, images, and video. Somehow, there exists indeed a kind of state that is stored by the server, even though this constraint seems to suggest the contrary. This incongruity is resolved by differentiating between two kinds of state: resource state and application state [19]. Only the former is kept on the server, while the latter resides inside the message body (and partly at the client).

The idea behind REST is to define resources at the application’s domain level. This means that technological artefacts such as “a service” or “a message” are not resources of a book store application. Instead, likely resource candidates are “book”, “user profile”, and “shopping basket”.

Before we explain the difference, we must first obtain an understanding of what exactly constitutes a resource. Resources are the fundamental unit for information in REST. Broadly speaking, “any information that can be named can be a resource” [11]. In practice, the resources of a particular Web application are the conceptual pieces of information exposed by its server. Note the word “conceptual” here; resources identify constant concepts instead of a concrete value that represents a concept at a particular point in time. For instance, the resource “today’s weather” corresponds to a different value every day, but the way of mapping the concept to the value remains constant. A resource is thus never equal to its value; “today’s weather” is different from an HTML page that details this weather. For the same reason, “The weather on February 28th, 2014” and “today’s weather” are distinct concepts and thus different resources—

In a book store, resource state would be the current contents of the shopping basket, name and address of the user, and the items she has bought.

Resource state, by consequence, is thus the combined state of all different resources of an application. This state is stored by the server and thus not the subject of the statelessness constraint. Given sufficient access privileges, the client can view and/

The book the user is consulting and the credentials with which she’s signed in are two typical examples of application state.

Application state, in contrast, describes where the client is in the interaction: what resource it is currently viewing, what software it is using, what links it has at its disposition, … It is not the server’s responsibility to store this. As soon as a request has been answered, the server should not remember it has been made. This is what makes the interaction scalable: no matter how many clients are interacting with the server, each of them is responsible for maintaining its own application state. When making a request, the client sends the relevant application state along. Part of this is encoded as metadata of each request (for example, HTTP headers with the browser version); another part is implicitly present through the resource being requested.

A back button that doesn’t allow you to go to your previous steps is an indication the server maintains the application state, in violation of the statelessness constraint.

For instance, if the client requests the fourth page of a listing, the client must have been in a state where this fourth page was accessible, such as the third page. By making the request for the fourth page, the server is briefly reminded of the relevant application state, constructs a response that it sends to the client, and then forgets the state again. The client receives the new state and can now continue from there. The uniform interface, which is the next constraint we’ll discuss, provides the means of achieving statelessness in REST architectures.

The uniform interface constraints

The central distinguishing feature of the REST architectural style is its emphasis on the uniform interface, consisting of four constraints, which are discussed below. Together, they provide simplification, visibility, and independent evolution [11].

Identification of resources

In some Web applications, we can see actions such as addComment as the target of a hyperlink. However, these are not resources according to the definition: an “addComment” is not a concept. As an unfortunate consequence, their presence thus breaks compatibility with REST. Since a resource is the fundamental unit of information, each resource should be uniquely identifiable so it can become the target of a hyperlink. We can also turn this around: any indivisible piece of information that can (or should) be identified in a unique way is one of the application’s resources. Since resources are conceptual, things that cannot be digitized (such as persons or real-world objects) can also be part of the application domain—

Each resource can be identified by several identifiers, but each identifier must not point to more than one resource. On the Web, the role of unique identifiers is fulfilled by URLs, which identify resources and allow HTTP to locate and interact with them.

Manipulation of resources through representations

The common human-readable representation formats on the Web are HTML and plaintext. For machines, JSON, XML, and RDF can be found, as well as many binary formats such as JPEG and PNG. Clients never access resources directly in REST systems; all interactions happen through representations. A representation represents the state of a resource—

Self-descriptive messages

Out-of-band information is often found in software applications or libraries, an example being human-readable documentation. It increases the difficulty for clients to interoperate with those applications. Media types are only part of the solution. Messages exchanged between clients and servers in REST systems should not require previously sent or out-of-band information for interpretation. One of the aspects of this is statelessness, which we discussed before. Indeed, messages can only be self-descriptive if they do not rely on other messages. In addition, HTTP also features standard methods with well-defined semantics (GET, POST, PUT, DELETE, …) that have properties such as safeness or idempotence [13]. However, in Chapter 3, we’ll discuss when and how to attach more specific semantics to the methods in those cases where the HTTP specification deliberately leaves options open.

Hypermedia as the engine of application state

Page 3 of a search result is retrieved by following a link, not by constructing a new navigation request from scratch. The fourth and final constraint of the uniform interface is that hypermedia must be the engine of application state. In REST Web applications, clients advance the application state by activating hypermedia controls. It is sometimes referred to by its HATEOAS acronym; we will use the term “hypermedia constraint”. From the statelessness constraint, we recall that application state describes the position of the client in the interaction. The present constraint demands that the interaction be driven by information inside server-sent hypermedia representations [12] rather than out-of-band information, such as documentation or a list of steps, which would be the case for Remote Procedure Call (RPC) interactions [20]. Concretely, REST systems must offer hypermedia representations that contain the controls that allow the client to proceed to next steps. In HTML representations, these controls include links, buttons, and forms; other media types offer different controls [1].

Many—

With the history of hypermedia in mind, this constraint seems very natural, but it is crucial to realize its importance and necessity, since the Web only has publisher-driven, one-directional links. When we visit a webpage, we indeed expect the links to next steps to be there: an online store leads to product pages, a product page leads to product details, and this page in turn allows to order the product. However, we’ve all been in the situation where the link we needed wasn’t present. For instance, somebody mentions a product on her homepage, but there is no link to buy it. Since Web linking is unidirectional, there is no way for the store to offer a link from the homepage to the product, and hence, no way for the user to complete the interaction in a hypermedia-driven way. Therefore, the presence of hypermedia controls is important.

While humans excel in finding alternative ways to reach a goal (for instance, entering the product name in a search engine and then clicking through), machine clients do not. These machine clients are generally pieces of software that aim to bring additional functionality to an application by interacting with a third-party Web application, often called a Web API (Application Programming Interface) in that context. According to the REST constraints, separate resources for machines shouldn’t exist, only different representations. Machines thus access the same resources through the same URLs as humans. In practice, many representations for machine clients unfortunately do not contain hypermedia controls. As machines have no flexible coping strategies, they have to be rigidly preprogrammed to interact with Web APIs in which hypermedia is not the engine of application state. If we want machines to be flexible, the presence of hypermedia controls is a necessity, surprisingly even more than for human-only hypertext.

Hypermedia on the Web

Fielding coined his definition of hypertext only in April 2008, several years after the derivation of REST, in a talk titled “A little REST and relaxation”.

Yet, its significance is important.

Fielding’s definition of hypertext [11] (and by extension, hypermedia) guides us to an understanding of the role of hypermedia on the Web:

When I say hypertext, I mean the simultaneous presentation of information and controls such that the information becomes the affordance through which the user (or automaton) obtains choices and selects actions. Roy Thomas Fielding

As this definition is very information-dense, we will interpret the different parts in more detail. First, the definition mentions the simultaneous presentation of information and controls. This hints at the use of formats that intrinsically support hypermedia controls, such as HTML, where the presentation of the information is necessarily simultaneous with the controls because they are intertwined with each other. However, intertwining is not a strict requirement; what matters is that the client has access to the information and to the controls that drive the application state at the same time.

Second, by their presence, these controls transform the information into an affordance. As the precise meaning and significance of the term affordance will be clarified in Chapter 6, it suffices here to say that the controls make the information actionable: what previously was only text now provides its own interaction possibilities.

Third, these interaction possibilities allow humans and machine clients to choose and select actions. This conveys the notion of Nelson’s definition that the text should allow choices to the reader on an interactive screen [17]. Additionally, it refers to the hypermedia constraint, which demands the information contains the controls that allow the choice and selection of next steps.

Nowadays, most machine clients have been preprogrammed for interaction with a limited subset of Web APIs. However, I do expect this to change in the future—

Note how the definition explicitly includes machine clients. As we said before, the REST architecture offers similar controls (or affordances) to humans and machines, both of which use hypermedia. We can distinguish three kinds of machine clients. A Web browser is operated by a human to display hypermedia, and it can enhance the browsing experience based on a representation’s content. An API client is a preprogrammed part of a software application, designed to interact with a specific Web API. An autonomous agent [6] is capable of interacting with several Web APIs in order to perform complex tasks, without explicitly being programmed to do so.

Research questions

The fact that the publishers offer links to a client makes it easier for this client to continue the interaction, but at the same time puts a severe constraint on those publishers, who won’t be able to give every client exactly what it needs.

If we bring together Fielding’s definition of hypertext, the hypermedia constraint, and the publisher-driven, unidirectional linking model of the Web, an important issue arises. The same decisions that lead to the scalability of the Web are those that make it very hard to realize the hypermedia constraint.

Any hypermedia representation must contain the links to next steps, yet how can the publisher of information, responsible for creating this representation, know or predict what the next steps of the client will be? It’s not because the publisher is the client’s preferred party to provide the information, that it is also the best party to provide the controls to interact with this information [21, 22]. Even if it were, the next steps differ from client to client, so a degree of personalization is involved—

Given the current properties of the Web, hypermedia can only be the engine of application state in as far as the publisher is able to provide all necessary links. While this might be the case for links that lead toward the publisher’s own website, this is certainly not possible on the open Web with an ever growing number of resources. The central research question in this thesis is therefore:

How can we automatically offer human and machine clients the hypermedia controls they require to complete tasks of their choice?

An answer to this research question will eventually be explained in Chapter 6, but we need to tackle another issue first. After all, while humans generally understand what to do with hypermedia links, merely sending controls to a machine client is not sufficient. This client will need to interpret how to make a choice between different controls and what effect the activation of a certain control will have in order to decide whether this helps to reach a certain goal. The second research question captures this problem:

How can machine clients use Web APIs in a more autonomous way, with a minimum of out-of-band information?

Chapter 4 and Chapter 5 will explore a possible answer to this question, which will involve semantic technologies, introduced in Chapter 3. Finally, I want to explore the possibilities that the combination of semantics and hypermedia brings for Web applications:

How can semantic hypermedia improve the serendipitous reuse of data and applications on the Web?

This question will be the topic of Chapter 7, and is meant to inspire future research. As I will explain there, many new possibilities reside at the crossroads of hypermedia and semantics.

In this chapter, we looked at the Web from the hypermedia perspective, starting with the early hypertext systems and how the Web differs from them. Through the REST architectural style, a formalization of distributed hypermedia systems, we identified a fundamental problem of the hypermedia constraint: publishers are responsible for providing controls, without knowing the intent of the client or user who will need those controls. Furthermore, hypermedia controls alone are not sufficient for automated agents; they must be able to interpret what function the controls offer. I will address these problems by combining hypermedia and semantic technologies.

References

- [1]

- . Hypermedia types. In: Erik Wilde and Cesare Pautasso, editors, REST: From Research to Practice, pages 93–116. Springer, 2011.

- [2]

- . Hypermedia APIs with HTML5 and Node. O’Reilly, December 2011.

- [3]

- . HTML5 – a vocabulary and associated APIs for HTML and XHTML. Candidate Recommendation. World Wide Web Consortium, December 2012.

- [4]

- . Weaving the Web: The original design and ultimate destiny of the World Wide Web by its inventor. HarperCollins Publishers, September 1999.

- [5]

- ois Groff. The world-wide web. Computer Networks and ISDN Systems, 25(4–5): 454–459, 1992.

- [6]

- . The Semantic Web. Scientific American, 284(5): 34–43, May 2001.

- [7]

- . Uniform Resource Locators (URL). Request For Comments 1738. Internet Engineering Task Force, December 1994.

- [8]

- . As we may think. The Atlantic Monthly, 176(1): 101–108, July 1945.

- [9]

- . Specification of Internet transmission control program. Request For Comments 675. Internet Engineering Task Force, December 1974.

- [10]

- . Hypertext: an introduction and survey. Computer, 20(9): 17–41, September 1987.

- [11]

- . Architectural styles and the design of network-based software architectures. PhD thesis. University of California, 2000.

- [12]

- . REST APIs must be hypertext-driven. Untangled – Musings of Roy T. Fielding. October 2008.

- [13]

- . Hypertext Transfer Protocol (HTTP). Request For Comments 2616. Internet Engineering Task Force, June 1999.

- [14]

- . Principled design of the modern Web architecture. Transactions on Internet Technology, 2(2): 115–150, May 2002.

- [15]

- . Complex information processing: a file structure for the complex, the changing and the indeterminate. Proceedings of the ACM 20th National Conference, pages 84–100, 1965.

- [16]

- . Computer Lib / Dream Machines. self-published, 1974.

- [17]

- . Literary Machines. Mindful Press, 1980.

- [18]

- . Trait de documentation : Le livre sur le livre, théorie et pratique. Editiones Mundaneum, Brussels, Belgium, 1934.

- [19]

- . RESTful Web Services. O’Reilly, May 2007.

- [20]

- . Programming Web Services with XML-RPC. O’Reilly, June 2001.

- [21]

- . Distributed affordance: an open-world assumption for hypermedia. Proceedings of the 4th International Workshop on RESTful Design. WWW 2013 Companion, pages 1399–1406, May 2013.

- [22]

- . Semantic technologies as enabler for distributed adaptive hyperlink generation. June 2013.

- [23]

- . The curse of Xanadu. Wired, 3(6), June 1995.

![[Profile picture of Ruben Verborgh]](/images/ruben.jpg)