Querying history with Linked Data

Combining Triple Pattern Fragments with Memento to query previous dataset versions in the browser.

Data on the World Wide Web changes at the speed of light—

When I pitch the Triple Pattern Fragments interface for Linked Data, Semantic Webbers often react with skepticism. Can such a simple interface be useful in the real world? After we’ve shown it scales pretty well for servers, and yields surprising results in federated scenarios, it’s time for a new challenge. Given that data inevitably changes, we wondered whether we could bring historical data back to life by making older versions of datasets as easy to query as new ones. To make this happen, my colleague Miel Vander Sande worked together with Herbert Van de Sompel, Harihar Shankar and Lyudmila Balakireva of the Los Alamos National Laboratory to combine Triple Pattern Fragments with the Memento framework on the Web.

A deceptively simple interface lets us answer questions through the eyes of time.

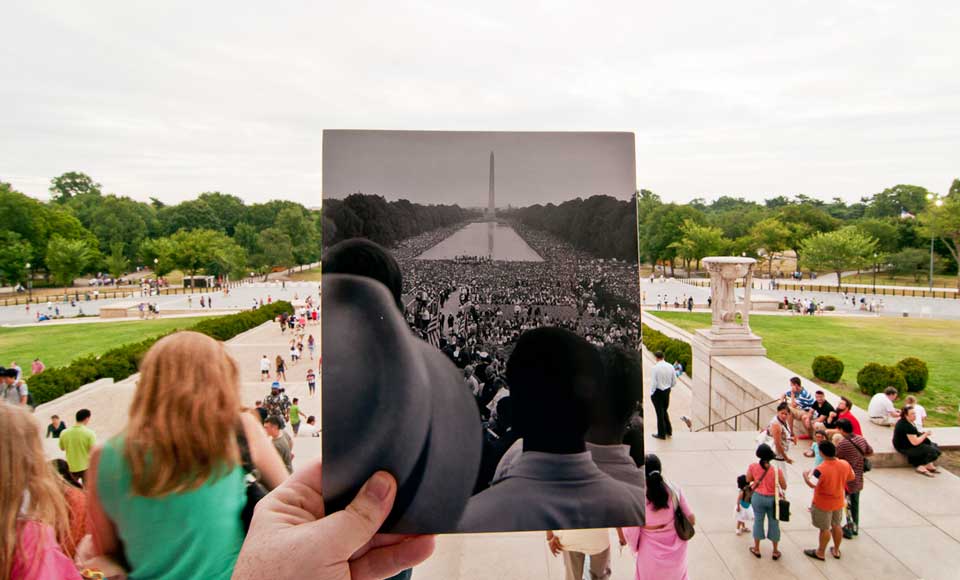

© Jason Powell

Memento for time-based access to representations

Memento is a framework (RFC7089) that implements time-based content negotiation on top of the HTTP protocol. If you’re familiar with HTTP and the REST uniform interface constraints, you know that a resource can have multiple representations. For instance, a fact sheet about the Washington Monument could have HTML, JSON, and RDF representations, and these could be in English, French, and other languages. Yet all of them would be the same resource: regardless of representation, they all would be the fact sheet about the monument.

Through a process called content negotiation, client and server agree which representation of the resource is the best fit for the situation. The most common implementation over HTTP involves the client indicating its preferences, for instance, through the Accept and Accept-Language headers. Then, the server tries to match the client’s preferences by searching among the possible representations. In its response, the server explicitly indicates the properties of the representation, for instance, through the Content-Type and Content-Language headers.

While the HTTP specification itself defines a limited number of headers to indicate such preferences, content negotiation can happen in many more dimensions. One of these dimensions is time, and the Memento specification provides the HTTP headers and mechanisms to negotiate representations based on a preferred timestamp. The client indicates its preference for a certain version with the Accept-Datetime header, and the server dates the time-specific representation with the Memento-Datetime header.
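
As a minimal sketch of the client side of this exchange, the snippet below builds the Accept-Datetime request header and reads back the Memento-Datetime of a response. The helper names are made up for this illustration and do not come from any Memento library; the only thing taken from the specification is that both headers carry an HTTP date in GMT.

```python
from datetime import datetime, timezone
from email.utils import format_datetime, parsedate_to_datetime


def accept_datetime_headers(preferred):
    """Build request headers that state a preference for a point in time."""
    if preferred.tzinfo is None:
        # Assumption of this sketch: naive datetimes are meant as UTC.
        preferred = preferred.replace(tzinfo=timezone.utc)
    utc = preferred.astimezone(timezone.utc)
    return {"Accept-Datetime": format_datetime(utc, usegmt=True)}


def memento_datetime(response_headers):
    """Return the datetime a response claims to represent, or None."""
    value = response_headers.get("Memento-Datetime")
    return parsedate_to_datetime(value) if value else None


request_headers = accept_datetime_headers(datetime(2010, 4, 1))
```

A response without a Memento-Datetime header is simply not a memento, which is why the second helper returns None rather than raising an error.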

An original resource can have multiple historical versions. It either performs time-based content negotiation between those versions itself, or delegates this task to a timegate.

In the Web archive world, external archiving is a common scenario. Therefore, negotiation can optionally happen through an auxiliary resource called a timegate. It lets third parties archive public resources even without access to their underlying server. In addition, the Memento framework defines a number of link relation types so resources, mementos, and timegates can refer to each other. This allows clients to discover, for example, where they can find different historical versions of a resource.
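
To illustrate how a client might use these link relations, here is a small sketch that parses a Link header and looks up targets by relation type. The parser is deliberately simplified (it is not a complete implementation of the Link header grammar), and the example.org URLs are placeholders rather than real archive locations.

```python
import re

# One link: <url> followed by zero or more ;name=value parameters.
LINK = re.compile(r'<([^>]+)>((?:\s*;\s*[\w-]+\s*=\s*(?:"[^"]*"|[^;,\s]+))*)')
PARAM = re.compile(r'([\w-]+)\s*=\s*(?:"([^"]*)"|([^;,\s]+))')


def parse_link_header(value):
    """Split a Link header into dictionaries with url, rel list and parameters."""
    links = []
    for url, raw_params in LINK.findall(value):
        params = {name.lower(): quoted or bare
                  for name, quoted, bare in PARAM.findall(raw_params)}
        # A single rel attribute can list several relation types.
        params["rel"] = params.get("rel", "").split()
        links.append({"url": url, **params})
    return links


def find_rel(links, rel):
    """Return the URLs of all links that carry the given relation type."""
    return [link["url"] for link in links if rel in link["rel"]]


# Hypothetical header, as a memento response could send it.
header = ('<http://example.org/data>; rel="original", '
          '<http://example.org/timegate/data>; rel="timegate", '
          '<http://example.org/2010/data>; rel="memento first"; '
          'datetime="Thu, 01 Apr 2010 00:00:00 GMT"')
links = parse_link_header(header)
```

With the parsed list, `find_rel(links, "timegate")` tells the client where to negotiate, and `find_rel(links, "original")` leads back to the unversioned resource.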

Applying Memento to Triple Pattern Fragments

Archiving a collection of resources thus involves providing historical representations of each of those resources through time-based content negotiation (possibly through a timegate). In the case of Linked Data documents, this would mean keeping multiple versions per subject of a dataset, which is quite easy to scale. However, in the case of a SPARQL endpoint, archiving would mean offering multiple versions per query, which is an infinite set of resources that cannot be pre-computed. A solution there would be to have one SPARQL endpoint (or one graph) per version, but this scales far worse.

With Triple Pattern Fragments, we need multiple versions per fragment. While this is a significantly larger number of resources compared to Linked Data Documents, each dataset only has a finite number of (non-empty) Triple Pattern Fragments. Furthermore, generating a TPF resource only requires minimal server cost. Complex SPARQL queries are evaluated by clients, who indicate with Accept-Datetime what version they prefer. Those clients thus combine historical fragments into a historical query result.
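
The server-side decision behind this negotiation can be sketched as a lookup over a sorted list of versions. The policy below (the most recent version at or before the requested datetime, falling back to the oldest one) is an assumption made for illustration; a real timegate is free to pick differently. The version dates and example.org URLs are placeholders, not the actual archive layout.

```python
from bisect import bisect_right
from datetime import datetime, timezone


def select_version(versions, preferred):
    """Pick a (datetime, url) pair from a list sorted by datetime.

    Returns the latest version that is not newer than `preferred`,
    or the oldest version if `preferred` predates all of them.
    """
    dates = [date for date, _ in versions]
    index = bisect_right(dates, preferred) - 1
    return versions[max(index, 0)]


def utc(year, month=1, day=1):
    return datetime(year, month, day, tzinfo=timezone.utc)


# Hypothetical versioned interfaces of one dataset.
VERSIONS = [
    (utc(2010), "http://example.org/2010/dataset"),
    (utc(2012), "http://example.org/2012/dataset"),
    (utc(2016), "http://example.org/2016/dataset"),
]

chosen = select_version(VERSIONS, utc(2013, 6, 1))
```

Because the lookup is the same for every fragment of a version, the cost of negotiation stays independent of the number of fragments in the dataset.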

Each Triple Pattern Fragment of DBpedia is available at different points in time through time-based content negotiation.

We applied Memento to the public DBpedia fragments interface, so historical versions of DBpedia can now be queried live on the public Web. Here’s how we set it up:

- The server at fragments.dbpedia.org hosts the latest version(s) of DBpedia.

- The server at fragments.mementodepot.org hosts archived versions of DBpedia.

- A timegate resides at fragments.mementodepot.org.

- Its original resource is the unversioned English DBpedia TPF interface.

The latest and archived versions of DBpedia are provided through the HDT compressed triple format, which the Node.js server of Triple Pattern Fragments transforms into HTTP responses. As usual, regular HTTP caching can be applied, as long as the Vary header lists Accept-Datetime to indicate that representations are time-sensitive.
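
To see why the Vary header matters, consider how a cache could derive its lookup key. This is a simplified sketch of the idea, not the behavior of any particular cache: the key consists of the URL plus the values of the request headers named in Vary. The function name and URL are illustrative.

```python
def cache_key(url, request_headers, vary):
    """Combine the URL with the request headers that the response varies on."""
    names = sorted(n.strip().lower() for n in vary.split(",") if n.strip())
    lowered = {k.lower(): v for k, v in request_headers.items()}
    return (url,) + tuple((name, lowered.get(name, "")) for name in names)


url = "http://example.org/dataset"
in_2010 = {"Accept-Datetime": "Thu, 01 Apr 2010 00:00:00 GMT"}
in_2012 = {"Accept-Datetime": "Sun, 01 Apr 2012 00:00:00 GMT"}

# With Vary: Accept-Datetime, the two requests are cached separately.
separate = cache_key(url, in_2010, "Accept-Datetime") != cache_key(url, in_2012, "Accept-Datetime")

# Without it, both requests would share one entry and one of them
# would receive the wrong version.
shared = cache_key(url, in_2010, "") == cache_key(url, in_2012, "")
```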

Preparing the query client

Since SPARQL queries against TPF interfaces are evaluated by the client, we need to update the client to support query evaluation over a Memento-enabled interface. Perhaps surprisingly, this was fairly trivial to implement.

First, since content negotiation happens transparently, existing clients don’t need to change and won’t notice anything special about the TPF interface. They will simply continue to query the version whose URL they are provided with.

Second, TPF clients build on top of the Web, including the HTTP protocol and hypermedia principles, so we merely needed to update the HTTP layer with Memento support. This diagram indicates the relevant components:

Only the HTTP layer of the TPF client needed to be changed. The fragment access layer remains unmodified as it simply follows hypermedia controls.

Broadly speaking, there are two options for implementing support in clients. One option is to negotiate every resource we access. For example, we negotiate the fragments “people in DBpedia” and “labels in DBpedia” independently (even though they are part of the same versioned interface). An alternative option is to negotiate on a single fragment, and subsequently use its hypermedia controls to retrieve data from the same interface. Since we did not change the hypermedia layer, the client defaults to the second option, following the hypermedia form on the first negotiated fragment it receives. (Independently from this, the HTTP layer will still add the Accept-Datetime header to every outgoing request, but since all subsequent responses will be already negotiated mementos, it doesn’t have an effect anymore.)
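
The second option can be sketched as follows: once the first fragment has been negotiated, the client takes the URL template from that fragment's hypermedia form and derives every later fragment URL from it, so all requests stay within the same versioned interface. The template below is a hypothetical example, and the expansion only handles the simple `{?a,b,c}` query form rather than URI Templates in general.

```python
from urllib.parse import urlencode


def expand_form(template, **pattern):
    """Fill in a `{?subject,predicate,object}`-style template."""
    base, _, variables = template.partition("{?")
    names = variables.rstrip("}").split(",") if variables else []
    params = {name: pattern[name] for name in names if name in pattern}
    return base + ("?" + urlencode(params) if params else "")


# Assumed to come from the hypermedia controls of the first negotiated fragment.
template = "http://example.org/2010/dataset{?subject,predicate,object}"

labels_url = expand_form(
    template, predicate="http://www.w3.org/2000/01/rdf-schema#label")
everything_url = expand_form(template)
```

Since the version is already baked into the template's base URL, no further negotiation is needed for the fragments that follow.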

Cross-origin issues in the browser

All of this worked fine with the command-line version of the client, but we ran into CORS trouble within the browser. After all, the client is often located on a different domain (for example, client.linkeddatafragments.org) than the datasets it accesses (for example, fragments.dbpedia.org). You might be tempted to think that setting the Access-Control-Allow-Origin header on responses is sufficient, as this usually solves cross-origin problems. But in this case, we need more.

Performing time-based content negotiation requires sending a non-simple request header, namely Accept-Datetime. This means the browser will first ask for permission to use this header using a preflight OPTIONS request. Only if the server replies that this header is allowed (and also allows reading the response headers we need) can the client script proceed to make the actual GET request. So we configured the server to reply to OPTIONS requests with the right headers.
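
A rough sketch of the headers involved is shown below. The function names are invented for this example, and the list of exposed response headers is an assumption about what a Memento-aware client would want to read; adjust both lists to your own setup.

```python
# Request header the browser must be allowed to send.
ALLOWED_REQUEST_HEADERS = ["Accept-Datetime"]
# Response headers the client script should be able to read (assumed list).
EXPOSED_RESPONSE_HEADERS = ["Memento-Datetime", "Link", "Content-Location"]


def preflight_response():
    """Status and headers for the reply to the browser's OPTIONS request."""
    return 204, {
        "Access-Control-Allow-Origin": "*",
        "Access-Control-Allow-Methods": "GET",
        "Access-Control-Allow-Headers": ", ".join(ALLOWED_REQUEST_HEADERS),
    }


def cors_headers_for_get():
    """CORS headers to add to the actual GET response."""
    return {
        "Access-Control-Allow-Origin": "*",
        "Access-Control-Expose-Headers": ", ".join(EXPOSED_RESPONSE_HEADERS),
    }


status, preflight_headers = preflight_response()
```

Note that permission to send a header is granted in the preflight reply, whereas permission to read response headers is granted on the GET response itself.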

Yet even then, we were not able to proceed the way we wanted. Essentially, there are two ways to perform content negotiation: either you redirect with a 302 to the negotiated representation, or you directly serve that representation with a 200 (indicating the representation’s URL with Content-Location). While we initially opted for 302-style negotiation, this turned out to be incompatible with the CORS spec. CORS states that, if a preflight request is needed (in our case it is, because of the non-simple request header), then redirection responses are not allowed. Hence, we had to switch the server to 200-style negotiation, which is not always the best choice, but the only option that would also work with CORS.
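
The difference between the two styles comes down to the status code and a handful of headers. The sketch below loosely follows the negotiation patterns of RFC 7089, with the response body and most Link relations left out for brevity; the function name and example.org URLs are placeholders.

```python
def negotiated_response(style, original_url, memento_url, memento_datetime):
    """Return (status, headers) for 302-style or 200-style negotiation."""
    common = {
        "Vary": "Accept-Datetime",
        "Link": '<%s>; rel="original"' % original_url,
    }
    if style == "302":
        # Redirect: the client fetches the memento in a second request.
        return 302, {"Location": memento_url, **common}
    if style == "200":
        # Direct: the memento is served right away from the negotiated URL.
        return 200, {
            "Content-Location": memento_url,
            "Memento-Datetime": memento_datetime,
            **common,
        }
    raise ValueError("style must be '302' or '200'")


redirect = negotiated_response(
    "302", "http://example.org/data", "http://example.org/2010/data",
    "Thu, 01 Apr 2010 00:00:00 GMT")
direct = negotiated_response(
    "200", "http://example.org/data", "http://example.org/2010/data",
    "Thu, 01 Apr 2010 00:00:00 GMT")
```

In the 200-style case, Content-Location is what lets the client learn the URL of the versioned representation without a redirect.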

Interestingly, we did not need to change the client implementation for this. As such, the (command-line version of the) client still supports 200- and 302-style negotiation. The HTTP layer simply follows redirects transparently, and the hypermedia layer uses the controls of whatever response it receives. So you can build your own server implementation the way you like; just be aware that, with the current CORS spec, cross‑origin browser clients cannot handle 302 redirects if they use custom headers.

Query through time in your browser

Now that we’ve established how the server and client work, it’s high time to try out a real-world SPARQL query. We chose this one:

    SELECT ?name ?deathDate WHERE {
      ?person a dbpedia-owl:Artist;
              rdfs:label ?name;
              dbpedia-owl:birthPlace [ rdfs:label "York"@en ].
      FILTER LANGMATCHES(LANG(?name), "EN")
      OPTIONAL { ?person dbpprop:dateOfDeath ?deathDate. }
    }

This query selects artists born in cities named “York”, along with their date of death where one is known. The OPTIONAL keyword is important here: without it, a date of death would be mandatory, so only deceased artists would be selected.
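
The effect of OPTIONAL can be imitated with a tiny left join over toy data. The identifiers and the date below are placeholders invented for this illustration, not DBpedia data.

```python
# Placeholder data: two artists, only one of whom has a recorded death date.
artists = ["artist-1", "artist-2"]
death_dates = {"artist-1": "1900-01-01"}


def join_death_dates(artists, death_dates, optional):
    """Pair each artist with a death date; keep unmatched artists only if optional."""
    rows = []
    for artist in artists:
        if artist in death_dates:
            rows.append((artist, death_dates[artist]))
        elif optional:
            # Like OPTIONAL: keep the row and leave the variable unbound.
            rows.append((artist, None))
    return rows


with_optional = join_death_dates(artists, death_dates, optional=True)
without_optional = join_death_dates(artists, death_dates, optional=False)
```

With `optional=True` both artists appear in the result; without it, the artist lacking a death date drops out.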

Let’s try this query over different versions of DBpedia.

- Query DBpedia in 2010

- Query DBpedia in 2012

- Query DBpedia in 2016

- (try your own by adjusting the date in the GUI)

In the execution log, you can see different versioned interfaces are used depending on the date you choose. We notice 3 patterns over time:

- The set of artists changes over time (mostly growing).

- More details are added to people who died some time ago.

- People who recently died get a death date.

Not only does this provide insights about a changing world, it also reveals how DBpedia itself evolves. Try changing the query to obtain this data for different cities, or try some of the example queries—

With Triple Pattern Fragments and Memento, we bring old Linked Data to life using a simple Web API. Because the interface for the server is cheap, you can do the same with your dataset. Follow the instructions to set up your own server—

PS: Just imagine how interesting things get if we combine this with federated querying.
